What is ETL and What can it do?

The abbreviation “ETL” stands for Extract-Transform-Load. It’s an essential data integration process to gather, process, and deliver data from diverse sources to a target system. Many integration platforms rely on ETL because it is so dynamic—It can support both structured and unstructured data in real time, whether it’s on-premises or in the cloud.

However, while ETL specifically focuses on the extraction, transformation, and loading of data, today’s integration tools often include a more diverse set of features.

For instance, StarfishETL is an Integration Platform as a Service (iPaaS) that offers a comprehensive suite of integration capabilities. These capabilities include ETL functionalities, but they also extend to support real-time integration, Application Programming Interface (API) management, and event-driven workflows.

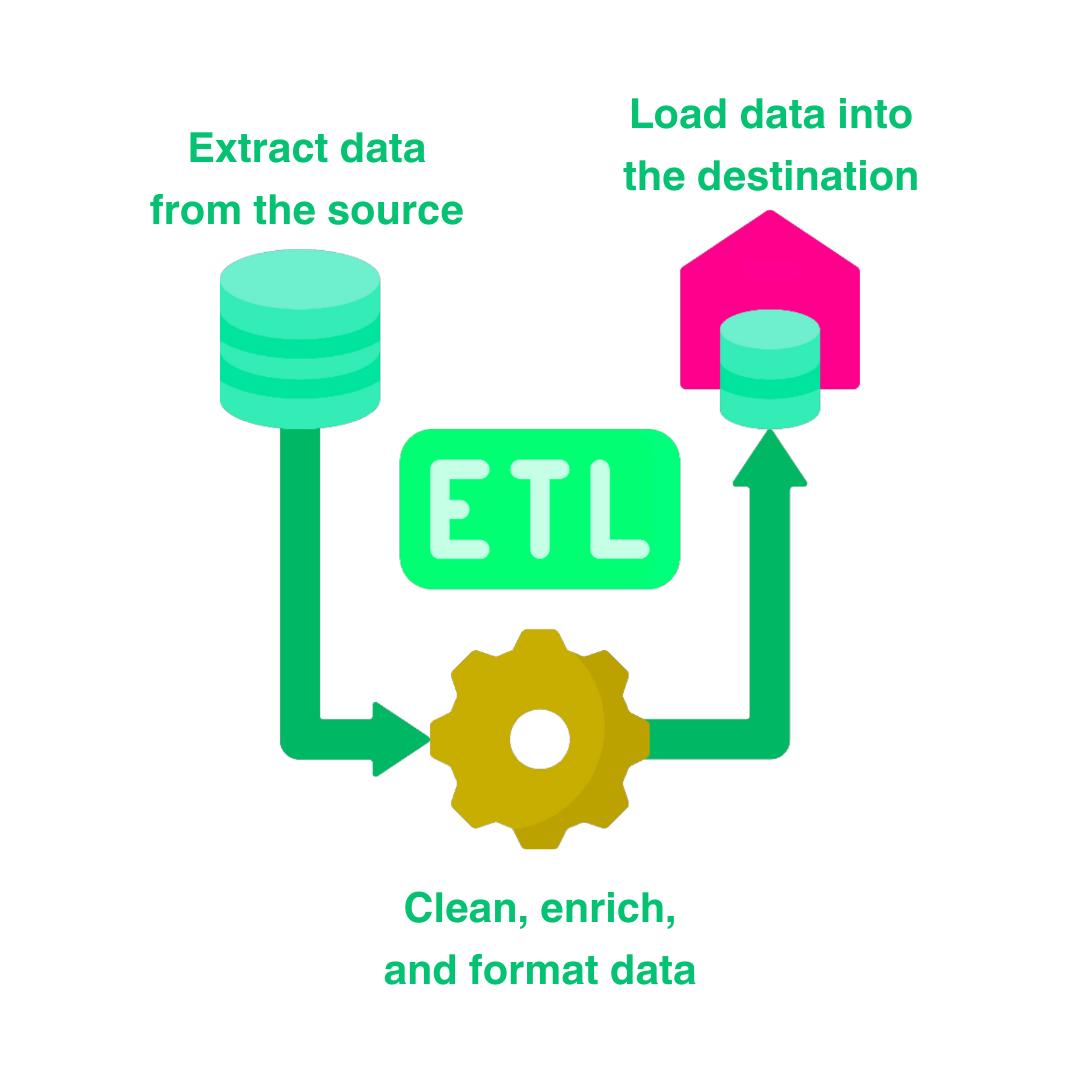

What exactly is meant by “Extract, Transform, Load”? How do each of these stages contribute to a seamless integration project? Here’s a quick breakdown.

At the core of ETL lies the extraction phase, where data is sourced from disparate origin systems. ETL tools excel in this domain, effortlessly retrieving data from sources online, on-premises, in legacy environments, and other locations. Once the ETL tool has extracted the information, the data is loaded into a staging area for transformation.

In the transformation stage of ETL, the extracted data is processed. These tasks include cleansing, enriching, and formatting the data into a structure that makes sense for the target database, data store, data warehouse, or data lake.

Depending on the use cases for the integration, data transformation may also include data quality and compliance audits, converting currencies or other units of measurement, editing text strings, or encrypting data governed by an industry or governmental regulators.

Once the data is clean and correctly formatted to the destination system, it’s time to load it into its destination. Integration is continuous, so after the initial load of all the data, automated processes continue to load data in increments over time. These subsequent data loads are scheduled at specific times or based on specific triggers. For example, if a CRM and marketing automation system are integrated, and a new lead is added to the CRM, that may trigger the integration workflow to push that lead into the marketing automation.

Oftentimes, businesses schedule these data updates in off-hours when traffic to the systems is lower, but that’s not always the case. Businesses that rely on up-to-date data (like manufacturers, who need real-time inventory updates) will opt to load their data changes in real-time. Whether the loading increments are scheduled or immediate, this phase is ongoing for the lifetime of the integration.

ETL is widely used for integration because it’s effective and efficient. Its ability to batch process, integrate in real-time, and scale exponentially make it particularly ideal.

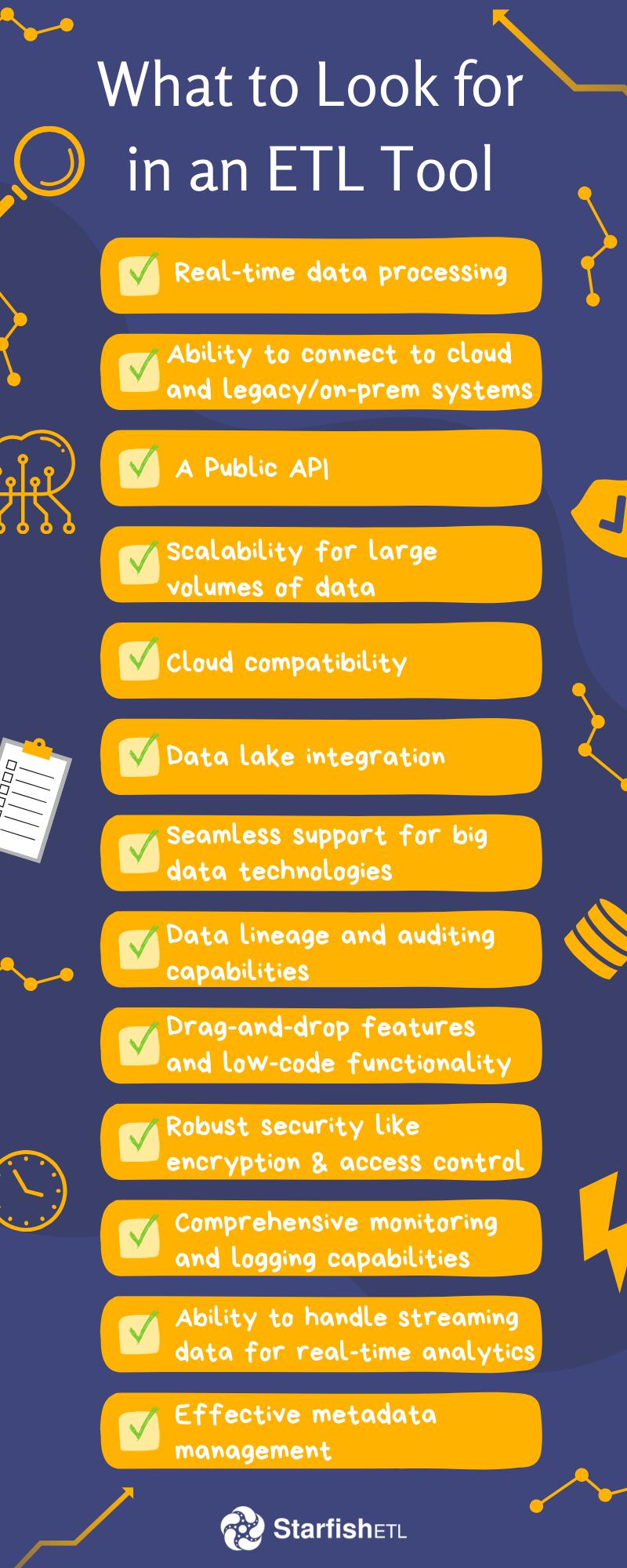

Perhaps this question should read “What should ETL do?”. ETL has evolved since it first emerged in the 1980s and 1990s. ETL was born because organizations started accumulating huge volumes of data from various sources and needed a systematic approach to integrating, cleaning, and organizing that data for analysis. Today, businesses are moving faster and using more data than ever, and ETL tools must offer capabilities that match the new business normal. So what should a modern ETL be able to do?

Modern ETL tools should not only excel in traditional batch processing but also align with the evolving landscape of data integration, supporting real-time processing, cloud environments, big data technologies, and ensuring data quality, security, and flexibility. To learn more about the usability of ETL for your next integration, reach out to our expert team at sales@starfishetl.com.

Fill out the form below and we will contact you